Synthesizing trumpet tones with Marsyas using FM synthesis

We are going to emulate a trumpet tone using the two-operator method described by Dexter Morrill in the first edition of the Computer Music Journal.

Prerequisites

To follow this tutorial you will need:

- Python - I'm using version 2.7, but 2.6 or 2.5 should work as well

- Marsyas - compiled with the SWIG Python bindings

- marsyas_util - found in src/marsyas_python/ from the Marsyas svn repository

- plot_spectrogram - from the same location

A tutorial on installing Marsyas and the SWIG Python bindings can be found here.

I'm also assuming you have some experience with classes in Python, and with object-oriented programming in general.

Let's talk about FM synthesis

FM is short for frequency modulation. The name is apt because it literally describes what is taking place: we are modulating the frequency of a signal. In other words, we are changing the pitch of a tone over time. If you change the pitch back and forth fast enough, say at the rate of an audio signal, it starts sounding like one tone consisting of many frequencies.

The easiest and most commonly used version of FM synthesis uses two sine wave generators. One is called the carrier; it is where we get our output from. The other is called the modulator; it controls the frequency of the carrier.
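As a rough illustration of the carrier and modulator idea, here is a minimal pure-Python sketch that does not use Marsyas at all. The function name `fm_tone` and its parameters are made up for this example; the modulator simply pushes the carrier frequency up and down by `depth_hz`.

```python
import math

def fm_tone(carrier_hz, mod_hz, depth_hz, duration=1.0, sample_rate=44100):
    """Return samples of a sine carrier whose frequency is moved
    up and down by a sine modulator (hypothetical helper)."""
    samples = []
    phase = 0.0  # running phase of the carrier, in radians
    for n in range(int(round(duration * sample_rate))):
        t = n / float(sample_rate)
        # The modulator swings the carrier frequency by +/- depth_hz.
        inst_hz = carrier_hz + depth_hz * math.sin(2.0 * math.pi * mod_hz * t)
        # Advance the carrier phase by the instantaneous frequency.
        phase += 2.0 * math.pi * inst_hz / sample_rate
        samples.append(math.sin(phase))
    return samples

# Carrier and modulator both at 440 Hz, carrier swung by +/- 880 Hz.
tone = fm_tone(carrier_hz=440.0, mod_hz=440.0, depth_hz=880.0, duration=0.1)
```

With `depth_hz` set to 0 the modulator has no effect and the output is a plain sine wave at the carrier frequency.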

Both are normally set to be in the audible range, but some neat aliasing effects can be achieved if they are not (this also depends on the sample rate of the system). See this.

The two most important parameters when working with FM synthesis are:

- Modulation Index

- Modulation Ratio

The modulation ratio is used to calculate the frequency of the modulator:

modulation frequency = base frequency x ratio

The modulation index is used to calculate how many Hz our signal should be modulated by:

modulation depth = base frequency x modulation index

It is important to note that once the modulation index gets higher than three, the spectrum becomes harder to predict.
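The two formulas above can be written out as a small helper. This is only a sketch of the arithmetic as defined in this tutorial; the function name `modulator_settings` is hypothetical and is not part of Marsyas.

```python
def modulator_settings(base_hz, ratio, index):
    """Turn a modulation ratio and index into modulator settings in Hz,
    using the definitions given in this tutorial (hypothetical helper)."""
    mod_hz = base_hz * ratio    # modulation frequency = base frequency x ratio
    depth_hz = base_hz * index  # modulation depth = base frequency x modulation index
    return mod_hz, depth_hz

# Example: a 440 Hz tone with a 1:1 ratio and an index of 2.66.
mod_hz, depth_hz = modulator_settings(440.0, 1.0, 2.66)
print("modulator: %.1f Hz, depth: %.1f Hz" % (mod_hz, depth_hz))
```

With a ratio of 1 the modulator runs at the same frequency as the base tone, so only the index changes how far the carrier is pushed.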

Trumpets

To approximate a trumpet tone we need about eight harmonics. Most of the energy is contained around the first and sixth harmonics.

One approach to generating these harmonics would be to use a single FM pair with the modulation index set high enough to generate eight harmonics.

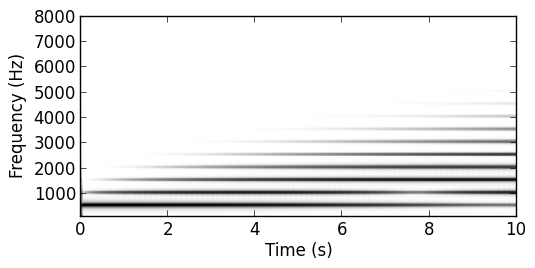

Modulation index ramped from 0 to 8

As you can see, though, as the modulation index gets higher, energy is lost from the fundamental. This does not fit the goal of having most of our energy in the first harmonic. Also, there is not enough energy in the sixth harmonic.
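The loss of energy at the fundamental can be checked numerically. In simple FM the strength of the component at the carrier frequency follows the Bessel function J0 of the modulation index, and with a 1:1 ratio the carrier sits on the fundamental. The sketch below evaluates the Bessel power series in plain Python; `bessel_j` is a helper written for this example, and it ignores the sidebands that reflect around 0 Hz, so treat the numbers as a rough guide only.

```python
import math

def bessel_j(n, x, terms=30):
    """Bessel function of the first kind J_n(x), summed from its power
    series. Accurate enough for the small orders and indices used here."""
    total = 0.0
    for k in range(terms):
        coeff = (-1.0) ** k / float(math.factorial(k) * math.factorial(k + n))
        total += coeff * (x / 2.0) ** (2 * k + n)
    return total

# Carrier-frequency component as the index rises.
for index in (0.0, 1.0, 2.0, 3.0):
    print("index %.1f: carrier component %.3f" % (index, bessel_j(0, index)))
```

The carrier component starts at full strength with an index of 0 and shrinks quickly as the index approaches 2, which matches the fading fundamental in the plot.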

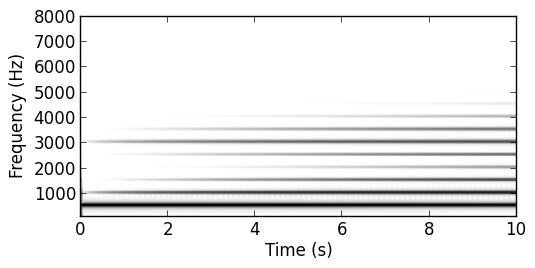

By using two of these pairs one 6 times higher, and keeping the modulation ratio of both less than three we get a much more predictable spectrum.

Osc1 ramped from 0 to 2.66 | Osc2 ramped from 0 to 1.8

This also gives us that extra energy needed around the sixth harmonic.
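To make the two-pair idea concrete, here is a minimal pure-Python sketch that sums two FM pairs, one with its carrier at the base frequency and one six times higher, with the index of each ramped from 0 to 2.66 and 1.8 as in the plot. Several details are assumptions made for this example and do not come from the text above: both modulators run at the base frequency, the upper pair is mixed in at a gain of 0.2, and the names `fm_pair` and `trumpet_sketch` are hypothetical. It uses the common phase-modulation form of FM and leaves out envelopes and vibrato, so it is not a full trumpet model.

```python
import math

def fm_pair(carrier_hz, mod_hz, peak_index, n_samples, sample_rate):
    """One carrier/modulator pair with its index ramped from 0 to peak_index."""
    two_pi = 2.0 * math.pi
    out = []
    for n in range(n_samples):
        t = n / float(sample_rate)
        # Linear ramp of the modulation index over the length of the note.
        index = peak_index * n / float(max(n_samples - 1, 1))
        out.append(math.sin(two_pi * carrier_hz * t
                            + index * math.sin(two_pi * mod_hz * t)))
    return out

def trumpet_sketch(base_hz=440.0, duration=0.5, sample_rate=44100,
                   upper_gain=0.2):
    """Sum a pair at base_hz and a quieter pair at 6 x base_hz.
    upper_gain is an assumed mix level, not a value from the tutorial."""
    n_samples = int(round(duration * sample_rate))
    low = fm_pair(base_hz, base_hz, 2.66, n_samples, sample_rate)
    high = fm_pair(6.0 * base_hz, base_hz, 1.8, n_samples, sample_rate)
    scale = 1.0 / (1.0 + upper_gain)  # keep the sum within -1..1
    return [scale * (a + upper_gain * b) for a, b in zip(low, high)]

samples = trumpet_sketch(duration=0.05)
```

Because both indices stay below three, each pair only spreads energy over a few neighbouring harmonics, around the first and around the sixth.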